Written By: Sudhanshu Dubey, Open Mainframe Project Summer Mentee

In the last blog post, we talked about the Open Mainframe Project Mentorship in general and its importance. I also mentioned the four steps I will follow for application modernization:

- Analyzing the COBOL Application.

- Identifying service candidates.

- Isolating and exposing them as services.

- Connecting them to a new, modern UI.

Today, we will talk about the very first step. While I will be talking in the context of a COBOL application, I believe these code analysis steps can be applied to applications written in any language.

During my technical training, I worked with the application code (around 10 files), which reassured me that it wasn’t too big an application. I learned later that I had a demo of a demo and that the actual application has around 60 files! And every one of those files is hundreds of lines long!!

*deep breaths* But this is where a proper method of code analysis is really useful.

To Understand the Code, Think like a User

Be a user before being the developer. It’s essentially reverse engineering the application. Run the application, navigate around it, give inputs, get outputs, give dumb inputs (you know, 0 as the denominator in a calculator), and see how the application behaves. This whole process will give you a good understanding of the application and of how well it is developed. You can even bring out the software tester in you to really shake up the application and see every possible output it can give.

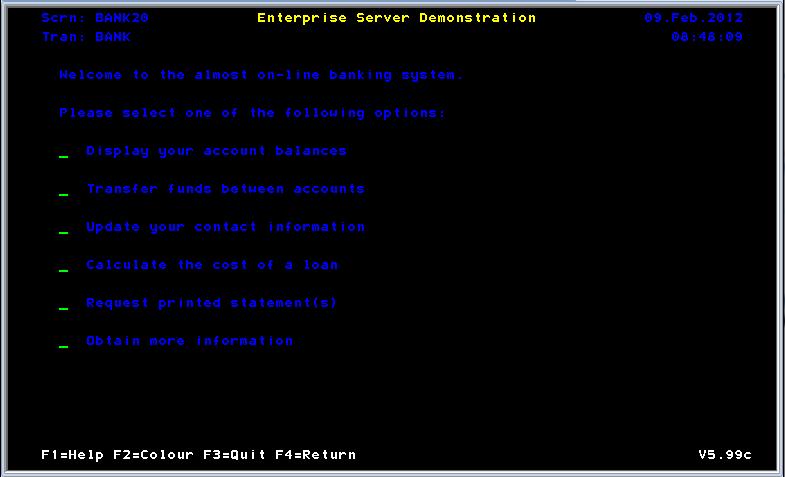

In my case, though, COBOL applications aren’t that simple to run. My application had two components: Batch and CICS (Customer Information Control System), and running either of them requires a mainframe. Luckily, I had Micro Focus Enterprise Server (ES), which is essentially software that emulates a mainframe environment on Windows. It does more than that, but this is our use case here. With ES, I was able to submit the batch jobs just as I did in Master the Mainframe and get the results. The CICS component is a little more complex, though, and this was my first encounter with it. You can think of it as the user interface of legacy mainframe applications. It looks like this:

CICS screen

My limited experience with ISPF helped me navigate these screens, where the mouse is not your best friend. These were my conclusions after experiencing the application as a user:

- The batch part, by its nature, isn’t a candidate for modernization. It does what it does, and there is nothing to change there.

- The login module can be made into a service, but it will still work as part of other services. It doesn’t make sense to authenticate without any authorized task to perform.

- Help screens present in the CICS application won’t be needed with a modern UI.

Overall, using the application gave me a feel for it and showed me the actual end result of everything going on inside it.

“I See”

Our eyes help us the most in understanding our surroundings, and similarly, visualizing code helps us get a good idea of it. But correctly visualizing unknown code is not simple, which is why a good tool, such as Application Intelligence software, is required. I had Micro Focus Enterprise Analyzer (EA) with me, and its Diagrammer helped a lot. With your software, you should try to generate all kinds of flow diagrams, as different diagrams reveal different aspects of the application.

The popular data flow diagram tells us which programs perform which operations on which data files. The screen flow diagram shows us which program controls which screen (the UI), and the CICS flow diagram gives us a big, clear picture of everything going on in the CICS part of the application. Similarly, the job executive report narrates the whole story of the only batch job in the application. These are some of the default diagram types that EA can generate, but with some tweaking I could also generate custom diagrams, like this calls and data flow diagram, which is a mixture of call maps and data flow diagrams. I could even isolate some files into a separate project for a more in-depth analysis of them. In short, use your diagrammer as much as you can, because it can show you things in a way nothing else can.
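EA builds its diagrams from a full parse of the application, which is far beyond a blog snippet. Still, the core idea behind a calls and data flow diagram can be sketched in a few lines. The Python below is a minimal sketch, not how EA works: it assumes fixed-format COBOL source (code in columns 8-72, `*` or `/` in column 7 for comment lines), picks up only literal `CALL 'NAME'` statements plus `EXEC CICS LINK`/`XCTL` with a literal `PROGRAM('NAME')` and `EXEC CICS READ/WRITE/REWRITE/DELETE` with a literal `FILE('NAME')`, and ignores dynamic calls, copybooks and batch file I/O. The program and file names (`ACCTSCR`, `ACCTBUS`, `ACCTDAT`, `ACCTFIL`) are hypothetical.

```python
import re
from collections import defaultdict

# Static calls only: CALL 'PROGNAME'. Dynamic calls through a data item
# (e.g. CALL WS-PROG-NAME) are NOT resolved by this sketch.
CALL_RE = re.compile(r"\bCALL\s+['\"]([^'\"]+)['\"]", re.IGNORECASE)
# One EXEC CICS ... END-EXEC block (may span lines); group 1 is the command verb.
EXEC_CICS_RE = re.compile(r"\bEXEC\s+CICS\s+(\w+)(.*?)END-EXEC",
                          re.IGNORECASE | re.DOTALL)
# Literal PROGRAM('X') and FILE('X') options inside a CICS block.
PROGRAM_OPT_RE = re.compile(r"\bPROGRAM\s*\(\s*['\"]([^'\"]+)['\"]\s*\)", re.IGNORECASE)
FILE_OPT_RE = re.compile(r"\bFILE\s*\(\s*['\"]([^'\"]+)['\"]\s*\)", re.IGNORECASE)
FILE_VERBS = {"READ", "WRITE", "REWRITE", "DELETE"}


def code_text(source: str) -> str:
    """Keep the code area (columns 8-72) of fixed-format COBOL, dropping comment lines."""
    kept = []
    for line in source.splitlines():
        if len(line) > 6 and line[6] in "*/":
            continue  # '*' or '/' in column 7 marks a comment line
        kept.append(line[7:72])
    return "\n".join(kept)


def analyze(programs: dict[str, str]):
    """Build a call map (caller -> callees) and a file map (program -> {(verb, file)})."""
    calls = defaultdict(set)
    file_ops = defaultdict(set)
    for name, source in programs.items():
        text = code_text(source)
        calls[name].update(callee.upper() for callee in CALL_RE.findall(text))
        for verb, options in EXEC_CICS_RE.findall(text):
            verb = verb.upper()
            if verb in ("LINK", "XCTL"):
                calls[name].update(p.upper() for p in PROGRAM_OPT_RE.findall(options))
            elif verb in FILE_VERBS:
                file_ops[name].update((verb, f.upper()) for f in FILE_OPT_RE.findall(options))
    return calls, file_ops


# Hypothetical three-layer chain: screen -> business -> data -> file.
SCREEN = (
    "       PROCEDURE DIVISION.\n"
    "      * Hand the request over to the business layer.\n"
    "           EXEC CICS LINK PROGRAM('ACCTBUS')\n"
    "                COMMAREA(WS-COMM) END-EXEC.\n"
)
BUSINESS = "           CALL 'ACCTDAT' USING WS-ACCT-REC.\n"
DATA = (
    "           EXEC CICS READ FILE('ACCTFIL') INTO(WS-ACCT-REC)\n"
    "                RIDFLD(WS-ACCT-KEY) END-EXEC.\n"
)

if __name__ == "__main__":
    calls, file_ops = analyze({"ACCTSCR": SCREEN, "ACCTBUS": BUSINESS, "ACCTDAT": DATA})
    for caller in sorted(calls):
        for callee in sorted(calls[caller]):
            print(f"{caller} calls {callee}")
    for program in sorted(file_ops):
        for verb, file_name in sorted(file_ops[program]):
            print(f"{program} does {verb} on {file_name}")
```

Running it prints one line per call edge and per file operation, which is the raw material a diagrammer turns into a picture. Regular expressions are a deliberate shortcut here; a real analyzer resolves dynamic calls and copybooks, which this sketch cannot.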

After staring at these diagrams for some time, I concluded the following:

- The CICS application is divided into three layers: Screen Handling, Business Logic Handling and Data Handling.

- There is also a pattern in the naming convention that links each program file to its layer. There are exceptions, though: some programs are just utilities and can’t be classified into any layer.

- The screens, screen handling programs and business logic handling programs have similar names if they are part of the same module. This is very helpful.

- The data handling programs work independently, so if two business layer programs need to perform the same operation on the same data, they call the same data layer program.
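Once you have a call map, conclusions like these can be checked mechanically rather than by eye. The sketch below assumes a hypothetical naming convention (the last character of a program name marks its layer: `S` for screen handling, `B` for business logic, `D` for data handling), because the application’s real convention isn’t spelled out here; every program name in it is made up too. It groups programs into modules by their shared name stem and lists the data layer programs that more than one business layer program calls.

```python
from collections import defaultdict

# HYPOTHETICAL naming convention, for illustration only: the last character
# of a program name marks its layer (S = screen, B = business, D = data).
# The real application's convention is not described here and will differ.
LAYER_BY_SUFFIX = {"S": "screen", "B": "business", "D": "data"}


def layer_of(program: str) -> str:
    """Map a program name to a layer; names that don't fit are treated as utilities."""
    return LAYER_BY_SUFFIX.get(program[-1:].upper(), "utility")


def module_of(program: str) -> str:
    """Programs of one module share a name stem (the name minus its layer suffix)."""
    name = program.upper()
    return name if layer_of(name) == "utility" else name[:-1]


def group_by_module(programs) -> dict[str, dict[str, list[str]]]:
    """Group program names as module -> layer -> sorted list of programs."""
    modules = defaultdict(lambda: defaultdict(list))
    for program in sorted(programs):
        modules[module_of(program)][layer_of(program)].append(program)
    return {module: dict(layers) for module, layers in modules.items()}


def shared_data_programs(calls: dict[str, set[str]]) -> dict[str, set[str]]:
    """Return data-layer programs that two or more business-layer programs call."""
    callers = defaultdict(set)
    for caller, callees in calls.items():
        if layer_of(caller) != "business":
            continue  # only business -> data edges matter here
        for callee in callees:
            if layer_of(callee) == "data":
                callers[callee].add(caller)
    return {program: who for program, who in callers.items() if len(who) >= 2}


# Hypothetical call map: two modules (ACCT, CARD) that share one data program.
CALLS = {
    "ACCTS": {"ACCTB"},
    "ACCTB": {"ACCTD", "CUSTD"},
    "CARDS": {"CARDB"},
    "CARDB": {"CARDD", "CUSTD", "DATEUTIL"},
}

if __name__ == "__main__":
    everything = set(CALLS).union(*CALLS.values())
    for module, layers in sorted(group_by_module(everything).items()):
        print(module, layers)
    print("shared data programs:", shared_data_programs(CALLS))
```

Anything that doesn’t match the suffix rule falls into a utility bucket, mirroring the exceptions mentioned above. A rule this simple would, of course, misfile a utility whose name happens to end in S, B or D, so treat its output as a starting point for reading, not a verdict.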

Read it to Get it

Finally, we are at the stage where we do what a developer does: read the actual code. Code reading is as essential a skill as code writing, and the two go hand in hand, since well-written code is easy to read and a good code reader writes readable code. This is especially true in open source, where many people will read your code, use it, and contribute to it. So yeah, level up your variable names, function names, comments, and indentation.

Readable code is where COBOL excels. And why not, when it was literally built to be readable? As its mother, Rear Admiral Grace “Amazing Grace” Hopper, put it:

“Manipulating symbols was fine for mathematicians but it was no good for data processors who were not symbol manipulators. Very few people are really symbol manipulators. If they are they become professional mathematicians, not data processors. It’s much easier for most people to write an English statement than it is to use symbols. So I decided data processors ought to be able to write their programs in English, and the computers would translate them into machine code. That was the beginning of COBOL, a computer language for data processors. I could say ‘Subtract income tax from pay’ instead of trying to write that in octal code or using all kinds of symbols. COBOL is the major language used today in data processing.”

I read some of the program files, but not all, since that wasn’t the aim of this code analysis. By this point, I had a very good understanding of the application, which I have documented here. This understanding is enough for me to start planning the service candidates, which we will talk about in the next blog post.

Until then, I will leave you with an invitation to the COBOL Mentorship session at Open Mainframe Summit, being held virtually on September 22-23. My colleagues in the COBOL Mentorship will give a final presentation on Thursday, September 23, at 10 am PDT/1 pm EDT. Register here: https://events.linuxfoundation.org/open-mainframe-summit/register/.